Linux - 2

Linux is the best-known and most-used open source operating system.

To Learn

Process Management

- View - Kill Process and other Signals - cd /proc - Start a Process - nohup, PM2, screen, setsid, bg, fg, disown - Port Binding 127 vs 192 vs 0

File Management

- less - grep - tail - cat

Nginx

- Install: ppa and source - Configure - User Permission - Locations - Logs

SSH

- Password/ File based logins - Passphrase - ~/.ssh permission - authorized_keys - id_rsa and id_rsa.pub - ssh_config

Java installation

- from ppa - From Source - adding and editing Environment Variables

TOMCAT

- Install and setup - Run - Log files - directories and files - Working

Exploring /proc File System in Linux

In this section we take a look inside the /proc directory. One misconception to clear up immediately is that /proc is NOT a real file system in the usual sense of the term. It is a virtual file system (procfs) that contains information about processes and other system information. It is mapped to /proc and mounted at boot time.

cd /proc

cat /proc/meminfo

A quick rundown of /proc’s files:

- /proc/cmdline – Kernel command line information.

- /proc/consoles – Information about current consoles including tty.

- /proc/devices – Device drivers currently configured for the running kernel.

- /proc/dma – Info about current DMA channels.

- /proc/fb – Framebuffer devices.

- /proc/filesystems – Current filesystems supported by the kernel.

- /proc/iomem – Current system memory map for devices.

- /proc/ioports – Registered port regions for input output communication with device.

- /proc/loadavg – System load average.

- /proc/locks – Files currently locked by kernel.

- /proc/meminfo – Info about system memory (see above example).

- /proc/misc – Miscellaneous drivers registered for miscellaneous major device.

- /proc/modules – Currently loaded kernel modules.

- /proc/mounts – List of all mounts in use by system.

- /proc/partitions – Detailed info about partitions available to the system.

- /proc/pci – Information about every PCI device (only on older kernels; newer systems expose this under /proc/bus/pci or through lspci).

- /proc/stat – Record of various statistics kept since the last reboot.

- /proc/swaps – Information about swap space.

- /proc/uptime – Uptime information (in seconds).

- /proc/version – Kernel version, gcc version, and Linux distribution installed.
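As a quick illustration of reading these files from a script, the sketch below pulls values out of /proc/uptime, /proc/loadavg and /proc/version. The variable names are made up for this example; the field order (uptime then idle time, and the 1-, 5- and 15-minute load averages) is the one described in the proc(5) manual page.

```shell
# /proc/uptime holds two numbers: seconds since boot and cumulative idle seconds.
read -r up_seconds idle_seconds < /proc/uptime

# /proc/loadavg starts with the 1-, 5- and 15-minute load averages.
read -r load1 load5 load15 rest < /proc/loadavg

echo "up for ${up_seconds}s, load averages: $load1 $load5 $load15"

# /proc/version is a single line describing the running kernel.
kernel_line=$(cat /proc/version)
echo "$kernel_line"
```

Because these are ordinary text files, no special tooling is needed; plain `read` and `cat` are enough.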

Within /proc’s numbered directories you will find a few files and links. The number of each directory is the PID of the process it describes. Let’s use an example. On my system, there is a folder named /proc/12:

cd /proc/12

ls

In any numbered directory, you will have a similar file structure. The most important ones, and their descriptions, are as follows:

- cmdline – command line of the process

- environ – environmental variables

- fd – file descriptors

- limits – contains information about the limits of the process

- mounts – mount-related information

You will also notice a number of links in the numbered directory:

- cwd – a link to the current working directory of the process

- exe – link to the executable of the process

- root – link to the root directory of the process
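The files and links above can be explored for any PID you are allowed to read. The sketch below inspects the shell's own entry, using `$$` (the PID of the current shell); the variable names are chosen just for this example.

```shell
# $$ expands to the PID of the current shell, so /proc/$$ is this shell's own directory.
pid=$$

# cmdline is NUL-separated; translate the NULs to spaces for display.
tr '\0' ' ' < "/proc/$pid/cmdline"; echo

# cwd and exe are symbolic links; readlink shows where they point.
cwd_target=$(readlink "/proc/$pid/cwd")
exe_target=$(readlink "/proc/$pid/exe")
echo "cwd: $cwd_target"
echo "exe: $exe_target"

# Each entry in fd/ is one open file descriptor of the process.
fd_count=$(ls "/proc/$pid/fd" | wc -l)
echo "open file descriptors: $fd_count"
```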

Keeping a process running

NOHUP

A process may not continue to run when you log out or close your terminal. You can avoid this by preceding the command you want to run with the nohup command. Also, appending an ampersand (&) will send the process to the background and allow you to continue using the terminal. For example, suppose you want to run myprogram.sh.

nohup myprogram.sh &

When a command is started with &, the shell prints the job number and the PID of the background process. I'll talk more about the PID next.
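A minimal sketch of the same pattern that can be run anywhere: it uses a short `sh -c` command as a stand-in for myprogram.sh, and a temporary log file whose name is only an assumption of this example. The `wait` at the end is there just to make the demo finish predictably; a real long-running job would simply be left in the background.

```shell
# Send output to a log file; without a redirect, nohup writes to nohup.out.
logfile=$(mktemp)

# Stand-in for a long-running program: prints, sleeps briefly, prints again.
nohup sh -c 'echo started; sleep 1; echo finished' > "$logfile" 2>&1 &

# $! holds the PID of the most recently started background job.
bg_pid=$!
echo "background PID: $bg_pid"

# Only for the demo: block until the job exits, then show what it logged.
wait "$bg_pid"
cat "$logfile"
```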

PM2

ADVANCED, PRODUCTION PROCESS MANAGER FOR NODE.JS

PM2 is a free, open-source, cross-platform daemon process manager for Node.js with a built-in load balancer. It helps you manage your application and keep it online 24/7.

It keeps your apps “alive forever” with automatic restarts and can be enabled to start at system boot, which supports High Availability (HA) configurations or architectures. Notably, PM2 allows you to run your apps in cluster mode without making any changes in your code (how much this helps depends on the number of CPU cores on your server). It also allows you to easily manage app logs, and much more.

https://www.tecmint.com/install-pm2-to-run-nodejs-apps-on-linux-server/

SCREEN

The screen command in Linux lets you launch and use multiple shell sessions from a single SSH session. When a process is started inside screen, you can detach from the session and reattach to it later. While the session is detached, the process that was started from screen keeps running and is managed by screen itself. When you reattach, the terminals are still there, the way you left them. Syntax:

screen [-opts] [cmd [args]]

setsid command in Linux

The setsid command runs a program in a new session. If the calling process is already a process group leader, setsid calls fork(2) and runs the program in the child; otherwise it executes the program in the current process.

Syntax:

setsid [options] program [arguments]

When you run a lengthy process interactively or in the background, it is attached to the shell. When the shell exits, the process will abort.

Although you can use nohup to ensure that a command ignores the hangup signal, which occurs when a user disconnects from the pseudo-terminal (pty), it is not as reliable as setsid. nohup is known to time out prematurely. setsid runs commands in a separate session (that is not attached to your pty), so the commands run to completion even after you log out.
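One way to see the separate session for yourself is to compare session IDs. The sketch below reads the sixth field of /proc/self/stat, which proc(5) documents as the session ID; splitting on spaces like this assumes the command name contains no spaces, which holds for `cut`. The variable names are made up for the example.

```shell
# Session ID of a command run normally: it shares the session of this shell.
cur_sid=$(cut -d' ' -f6 /proc/self/stat)

# Session ID of the same command run under setsid: it gets a new session.
new_sid=$(setsid cut -d' ' -f6 /proc/self/stat)

echo "current session: $cur_sid"
echo "setsid session:  $new_sid"
```

The two numbers should differ, which is why a hangup delivered to the original session does not reach the setsid-started command.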

exec command

The exec command in Linux is a shell builtin that runs a command in place of the current shell. It does not create a new process; it replaces the bash process with the command to be executed. If exec succeeds, it does not return to the calling process.
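A small sketch that makes both points visible: an inner shell prints its PID, then uses exec to replace itself with another shell that prints its PID too. The echo placed after exec is only there to show that control never comes back.

```shell
# Run an inner shell so that the exec does not replace the shell we are working in.
out=$(sh -c 'echo $$; exec sh -c "echo \$\$"; echo "never printed"')

pid_before=$(echo "$out" | sed -n 1p)   # PID printed before exec
pid_after=$(echo "$out" | sed -n 2p)    # PID printed by the replacement program

echo "before exec: $pid_before"
echo "after exec:  $pid_after"
```

Both lines show the same PID because no new process was created, and "never printed" does not appear because exec did not return.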

Daemon-izing a Process in Linux - just read. don’t go into details

https://codingfreak.blogspot.com/2012/03/daemon-izing-process-in-linux.html

What is Chkconfig used for?

The chkconfig command-line tool configures which services start and stop automatically, by managing the scripts in /etc/rc.d/init.d.

eg:

Configure the web server to start automatically at boot

chkconfig httpd on

Disown command

/dev/random

In Unix-like operating systems, /dev/random, /dev/urandom and /dev/arandom are special files that serve as pseudorandom number generators.

In this example, we will start up a couple of jobs running in the background:

cat /dev/random > /dev/null &

ping google.com > /dev/null &

jobs -l

Remove All Jobs

To remove all jobs from the job table, use the following command:

disown -a

Remove Specific Jobs

If you want to remove a specific job from the job table, use the disown command with the appropriate job ID. The job ID is listed in brackets in the job table.

In our example, if we want to remove the ping command, we need to use the disown command on job 2:

disown %2

Remove Currently Running Jobs

To remove only the jobs currently running, use the following command:

disown -r

Keep Jobs Running After You Log Out

Once you exit your system’s terminal, all currently running jobs are automatically terminated. To prevent this, use the disown command with the -h option:

disown -h jobID

In our example, we want to keep the cat command running in the background. To prevent it from being terminated on exit, use the following command:

disown -h %1

After you use the disown command, close the terminal:

exit

Any jobs you used the disown -h command on will keep running.

How to check if port is in use on Linux or Unix

https://www.cyberciti.biz/faq/unix-linux-check-if-port-is-in-use-command/

Run any one of the following commands on Linux to see open ports:

sudo lsof -i -P -n | grep LISTEN

sudo netstat -tulpn | grep LISTEN

sudo ss -tulpn | grep LISTEN

sudo lsof -i:22 ## see a specific port such as 22 ##

sudo nmap -sTU -O IP-address-Here

strace is a diagnostic, debugging and instructional userspace utility for Linux. It is used to monitor and tamper with interactions between processes and the Linux kernel, which include system calls, signal deliveries, and changes of process state.

Both strace and ltrace are powerful command-line tools for debugging and troubleshooting programs on Linux: strace captures and records all system calls made by a process as well as the signals received, while ltrace does the same for library calls.

Opening a port on Linux

https://www.journaldev.com/34113/opening-a-port-on-linux

List all open ports

netstat -lntu

For example, let’s open port 4000.

First, let’s make sure that port 4000 (it can be any port) is not already in use, using the netstat or the ss command.

netstat -na | grep :4000

ss -na | grep :4000
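If neither netstat nor ss is installed, the same check can be sketched directly against the /proc file system covered earlier. The function name `port_in_use` is made up for this example; it relies on the documented layout of /proc/net/tcp and /proc/net/tcp6, where the second column is the local address as HEXIP:HEXPORT and the fourth column is the socket state (0A means LISTEN). It only looks at TCP listeners.

```shell
# Succeeds (exit status 0) if some TCP socket is listening on the given port.
port_in_use() {
    # Ports appear in /proc/net/tcp as four uppercase hex digits, e.g. 4000 -> 0FA0.
    port_hex=$(printf '%04X' "$1")
    # tcp6 may be missing on IPv4-only systems, so errors from cat are ignored.
    cat /proc/net/tcp /proc/net/tcp6 2>/dev/null |
        awk -v p=":$port_hex" '
            $4 == "0A" && substr($2, length($2) - 4) == p { found = 1 }
            END { exit !found }'
}

if port_in_use 4000; then
    echo "port 4000 is already in use"
else
    echo "port 4000 is free"
fi
```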

Ubuntu has a firewall tool called ufw, which manages these rules for ports and connections so that you do not have to write iptables rules by hand. If you are an Ubuntu user, you can open the port directly using ufw:

sudo ufw allow 4000

Determining what process is bound to a port

netstat -lnp

How to bind a service to a port in Linux

https://www.fosslinux.com/46759/bind-service-port-linux.htm

Not all system services require an association with a port number, meaning they do not need to open a socket on a network to receive packets. However, if the network services need to communicate with other network processes continuously, a socket is required, making it mandatory for these services to bind to specific ports.

Port numbers make it easy to identify requested services. Their absence implies that a client-to-server request would be unsuccessful because the transport headers associated with these requests will not have port numbers that link them to specific machine services.

A service such as HTTP has a default binding to port 80. This default binding does not imply that the HTTP service can only receive network packets or respond to network requests via port 80. With access to the right config files, you can associate this service with a new custom port. After this successful configuration, accessing the service with the new port number would imply specifying the machine’s IP address or domain name and the new port number as part of its URL definition.

For example, a machine on an HTTP service network that was initially accessed via the IP address http://10.10.122.15 may have a new access URL like http://10.10.122.15:83 if the port number is changed from 80 to a custom port number like 83.

Modifying the /etc/services file

Now that we understand the relationship between network services and ports: any open network connection on a Linux server associates the client machine that opened the connection with a targeted service through a specific port. Some of these ports are classified as “well-known ports” because both the server and the client computers need to know them beforehand.

The mapping between service names and port numbers on a Linux machine is defined in the small local database file “/etc/services”. To explore the content of this file, you can open it with the nano command.

File Management

less, grep, tail, cat

Less

less is a Linux utility that can be used to read the contents of a text file one page (one screen) at a time. It opens large files quickly because it does not read the complete file up front, but accesses it page by page.

less filename

dmesg | less

dmesg | less -p "failure"

The above command tells less to start at the first occurrence of the pattern “failure” in the output.

dmesg | less -N

This shows the output along with line numbers.

Grep

grep is a Linux / Unix command-line tool used to search for a string of characters in a specified file. The text search pattern is called a regular expression. When it finds a match, it prints the line with the result. The grep command is handy when searching through large log files.

Note: Grep is case-sensitive. Make sure to use the correct case when running grep commands.

grep phoenix sample2.txt

Grep will display every line where there is a match for the word phoenix.

Searching multiple files

grep phoenix sample sample2 sample3

To search all files in the current directory, use an asterisk instead of a filename at the end of a grep command.

In this example, we use nix as a search criterion:

grep nix *

Grep allows you to find and print the results for whole words only. To search for the word phoenix in all files in the current directory, append -w to the grep command.

grep -w phoenix *

As grep commands are case sensitive, one of the most useful options for grep searches is -i. Instead of matching only the case you typed, grep then matches uppercase, lowercase, and mixed-case entries.

An example of this command:

grep -i phoenix *

To include all subdirectories in a search, add the -r option to the grep command.

grep -r phoenix *

You can use grep to print all lines that do not match a specific pattern of characters (inverse grep search). To invert the search, append -v to a grep command.

To exclude all lines that contain phoenix, enter:

grep -v phoenix sample

The grep command prints entire lines when it finds a match in a file. To print only those lines that completely match the search string, add the -x option.

grep -x "phoenix number3" *

Grep can display the filenames and the count of lines where it finds a match for your word.

Use the -c option to count the number of matching lines:

grep -c phoenix *

Tail command

Show the last 3 lines:

tail -n 3 test.txt

Display Contents of File(cat)

# cat /etc/passwd

displays line number

# cat -n song.txt
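The grep and tail options above can be tried safely on a throwaway file. In the sketch below, the file name and its five lines are invented for the example; the counts in the comments follow from those five lines.

```shell
# Build a small sample file in a temporary directory.
workdir=$(mktemp -d)
printf '%s\n' 'phoenix number1' 'Phoenix number2' 'phoenix number3' 'nixos' 'nix tools' \
    > "$workdir/sample.txt"

count_substring=$(grep -c nix "$workdir/sample.txt")       # 5: nix occurs in every line
count_word=$(grep -cw nix "$workdir/sample.txt")           # 1: only "nix tools" has nix as a whole word
count_default=$(grep -c phoenix "$workdir/sample.txt")     # 2: the search is case-sensitive
count_nocase=$(grep -ci phoenix "$workdir/sample.txt")     # 3: -i also matches "Phoenix"
count_inverse=$(grep -cv phoenix "$workdir/sample.txt")    # 3: lines without lowercase "phoenix"
exact_line=$(grep -x 'phoenix number3' "$workdir/sample.txt")  # -x: the whole line must match
last_line=$(tail -n 1 "$workdir/sample.txt")               # last line of the file

echo "$count_substring $count_word $count_default $count_nocase $count_inverse"
echo "$exact_line / $last_line"

# Clean up the temporary directory.
rm -rf "$workdir"
```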

What Is Nginx? A Basic Look at What It Is and How It Works

Nginx, pronounced like “engine-ex”, is an open-source web server that, since its initial success as a web server, is now also used as a reverse proxy, HTTP cache, and load balancer.

https://kinsta.com/knowledgebase/what-is-nginx/

With Nginx, one master process can control multiple worker processes. The master maintains the worker processes, while the workers do the actual processing. Because Nginx is asynchronous, each request can be executed by the worker concurrently without blocking other requests.

Some common features seen in Nginx include:

- Reverse proxy with caching

- A reverse proxy server is a type of proxy server that typically sits behind the firewall in a private network and directs client requests to the appropriate backend server. A reverse proxy provides an additional level of abstraction and control to ensure the smooth flow of network traffic between clients and servers.

- IPv6

- Load balancing

- FastCGI support with caching

- FastCGI is a programming interface that can speed up web applications that are invoked through the Common Gateway Interface (CGI), the most popular way for a web server to call an application.

- WebSockets

- A WebSocket is a persistent connection between a client and server. WebSockets provide a bidirectional, full-duplex communications channel that operates over HTTP through a single TCP/IP socket connection. At its core, the WebSocket protocol facilitates message passing between a client and server.

- Handling of static files, index files, and auto-indexing

- TLS/SSL with SNI (Server Name Indication)

Why use a reverse proxy?

https://www.loadbalancer.org/blog/why-should-businesses-use-reverse-proxy/

In a computer network, a reverse proxy server acts as a middleman, communicating with the users so the users never interact directly with the origin servers. Serving as a gateway, it sits in front of one or more web servers and forwards client (web browser) requests to those web servers. All web traffic passes through the proxy, which forwards each request to a server to be fulfilled and then returns the server’s response.

Reverse proxies make different servers and services appear as one single unit, allowing organizations to hide several different servers behind the same name - making it easier to remove services, upgrade them, add new ones, or roll them back. As a result, the site visitor only sees my-company-123.net and not myweirdinternalservername.my-company-123.net

To sum up, reverse proxy servers can:

- Conceal the characteristics and existence of origin servers

- Ease out takedowns and malware removals

- Carry TLS acceleration hardware, letting them perform TLS encryption in place of secure websites

- Spread the load from incoming requests to each of the servers that supports its own application area

- Layer web servers with basic HTTP access authentication

- Work as web acceleration servers that can cache both dynamic and static content, thus reducing the load on origin servers

- Perform multivariate testing and A/B testing without inserting JavaScript into pages

- Compress content to optimize it and speed up loading times

- Serve clients with dynamically generated pages bit by bit even when they are produced at once, allowing the pages and the program that generates them to be closed, releasing server resources during the transfer time

- Assess incoming requests via a single public IP address, delivering them to multiple web-servers within the local area network

Most businesses host their website’s content management system or shopping cart apps with an external service outside their own network. Instead of letting site visitors know that you’re sending them to a different URL for payment, businesses can conceal that detail using a reverse proxy.

Reverse proxy and load balancers: what’s the correlation?

A reverse proxy is a layer 7 load balancer (or, vice versa) that operates at the highest level applicable and provides for deeper context on the Application Layer protocols such as HTTP. By using additional application awareness, a reverse proxy or layer 7 load balancer has the ability to make more complex and informed load balancing decisions on the content of the message – whether it’s to optimise and change the content (HTTP header manipulation, compression and encryption) and/or monitor the health of applications to ensure reliability and availability. On the other hand, layer 4 load balancers are FAST routers rather than application (reverse) proxies where the client effectively talks directly (transparently) to the backend servers.

All modern load balancers are capable of doing both – layer 4 as well as layer 7 load balancing, by acting either as reverse proxies (layer 7 load balancers) or routers (layer 4 load balancers). An initial tier of layer 4 load balancers can distribute the inbound traffic across a second tier of layer 7 (proxy-based) load balancers. Splitting up the traffic allows the computationally complex work of the proxy load balancers to be spread across multiple nodes. Thus, the two-tiered model serves far greater volumes of traffic than would otherwise be possible and therefore, is a great option for load balancing object storage systems – the demand for which has significantly exploded in the recent years.

Installing Nginx

sudo apt update

sudo apt install nginx

The default page is placed in /var/www/html/. You can place your static pages there, or use a virtual host and place them in another location.

Ubuntu Linux: Start / Restart / Stop Nginx Web Server

sudo service nginx start

sudo service nginx stop

sudo service nginx restart

or

sudo systemctl start nginx

sudo systemctl stop nginx

sudo systemctl restart nginx

To view status of your Nginx server

Use any one of the following commands:

sudo service nginx status

## OR ##

sudo systemctl status nginx

/etc/nginx/nginx.conf

A note about reload nginx server

It is also possible to use the following syntax to reload the nginx server after you have made changes to a config file such as nginx.conf:

sudo nginx -s reload

OR

sudo systemctl reload nginx

OR

sudo service nginx reload

error log

sudo tail -f /var/log/nginx/error.log

It is also possible to use the systemd systemctl and journalctl commands for details on errors:

$ sudo systemctl status nginx.service

$ sudo journalctl -xe

systemctl is a Linux command-line utility used to control and manage systemd and services. You can think of systemctl as a control interface for the systemd init service, allowing you to communicate with systemd and perform operations. systemd is the successor of the older SysV init.

https://linuxhint.com/systemctl-utility-linux/

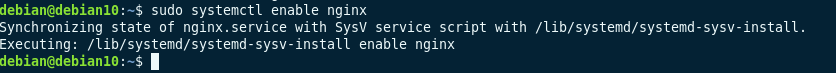

How to Enable Services at Boot

If you want a specific service to run during system startup, you can use the enable command.

For example:

$ sudo systemctl enable nginx

The above command, however, does not start the service in the current session. To do this as well, add the --now flag.

$ sudo systemctl enable nginx --now

Allow Nginx Traffic through a Firewall

You can list the application profiles that the firewall knows about using the following command:

sudo ufw app list

This should generate a list of application profiles. On the list, you should see three entries related to Nginx, plus OpenSSH:

Nginx Full – opens Port 80 for normal web traffic, and Port 443 for secure encrypted web traffic

Nginx HTTP – opens Port 80 for normal web traffic

Nginx HTTPS – opens Port 443 for encrypted web traffic

OpenSSH – a configuration for Secure Shell operations, which allow you to log into a remote server through a secure, encrypted connection

To allow normal HTTP traffic to your Nginx server, use the Nginx HTTP profile with the following command:

sudo ufw allow 'Nginx HTTP'

To check the status of your firewall, use the following command:

sudo ufw status

It should display a list of the kinds of traffic allowed to different services. Nginx HTTP should be listed as ALLOW and Anywhere.

Test Nginx in a Web Browser

Open a web browser, such as Firefox.

Enter your system’s IP address in the address bar or type localhost.

Your browser should display a page welcoming you to Nginx.

Define Server Blocks

Nginx uses a configuration file to determine how it behaves. One way to use the configuration file is to define server blocks, which work similar to an Apache Virtual Host.

Nginx is designed to act as a front for multiple servers, which is done by creating server blocks.

By default, the main Nginx configuration file is located at /etc/nginx/nginx.conf. Server block configuration files are located at /etc/nginx/sites-available.

To view the contents of the default server block configuration file, enter the following command in a terminal:

sudo vi /etc/nginx/sites-available/default

This should open the default configuration file in the Vi text editor, which should look something like this:

# Default server configuration
#
server {
    listen 80 default_server;
    listen [::]:80 default_server;

    [...]

    root /var/www/html;

    # Add index.php to the list if you are using PHP
    index index.html index.htm index.nginx-debian.html;

    server_name _;

    location / {
        # First attempt to serve request as file, then
        # as directory, then fall back to displaying a 404.
        try_files $uri $uri/ =404;
    }

    [...]
}

- The listen directives tell Nginx which ports to listen on for traffic

- default_server marks this as the block to use when a request does not match any other server block

- root sets the directory that holds the files for the website being served

- server_name lets you specify the name(s) a server block responds to, which is used in more advanced configurations

- location lets you define how Nginx handles requests for particular URI paths

Create a Sample Server Block

Set up an HTML File

Going through a sample configuration is helpful. In a terminal window, enter the following command to create a “test” directory to work with:

sudo mkdir /var/www/example

Create and open a basic HTML index file to work as a test webpage:

sudo vi /var/www/example/index.html

In the Vi text editor (you can substitute your preferred text editor if you’d like), enter the following:

Welcome to the Example Website!

Save the file and exit.

Set up a Simple Server Block

Use the following command to create a new server block file for our Test website:

sudo vi /etc/nginx/sites-available/example.com

This should launch the Vi text editor and create a new server block file. Enter the following lines into the text file:

server {
    listen 80;

    root /var/www/example;
    index index.html;

    server_name www.example.com;
}

This tells Nginx to look at the /var/www/example directory for the files to serve, and to use the index.html file we created as the front page for the website. Save the file and exit.

Create a Symbolic Link to Activate Server Block

In the terminal window, enter the following command:

sudo ln -s /etc/nginx/sites-available/example.com /etc/nginx/sites-enabled/

This creates a link and enables your test website in Nginx. Restart the Nginx service to apply the changes:

sudo systemctl restart nginx

Start Testing

In a browser window, visit www.example.com. For this test, www.example.com must resolve to your server (for example, through an entry in /etc/hosts on the machine running the browser).

Nginx should receive the request and display the text we entered in the HTML file.
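The sites-available / sites-enabled convention is just files plus symbolic links, so it can be rehearsed without touching a real Nginx installation. The sketch below recreates the two directories inside a temporary directory (the `demo` path and the tiny server block are stand-ins invented for this example) and shows that disabling a site only removes the link.

```shell
# Recreate the layout in a scratch directory instead of /etc/nginx.
demo=$(mktemp -d)
mkdir -p "$demo/sites-available" "$demo/sites-enabled"

# A placeholder server block file.
printf 'server {\n    listen 80;\n}\n' > "$demo/sites-available/example.com"

# "Enable" the site: link it into sites-enabled (the trailing slash keeps the file name).
ln -s "$demo/sites-available/example.com" "$demo/sites-enabled/"
link_target=$(readlink "$demo/sites-enabled/example.com")
echo "enabled via link to: $link_target"

# "Disable" the site: remove only the link; the file in sites-available is untouched.
rm "$demo/sites-enabled/example.com"
ls "$demo/sites-available"
```

On a real server the same two steps would be followed by a configuration test and a reload of Nginx.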

NGINX Configuration: Understanding Directives

Every NGINX configuration file is found in the /etc/nginx/ directory, with the main configuration file located at /etc/nginx/nginx.conf.

NGINX configuration options are known as “directives”: these are arranged into groups, known interchangeably as blocks or contexts.

Nginx configuration file locations

https://help.dreamhost.com/hc/en-us/articles/216455077-Nginx-configuration-file-locations

Serving custom content and setting up virtual host

https://ubuntu.com/tutorials/install-and-configure-nginx#1-overview

Creating our own website

The default page is placed in /var/www/html/. You can place your static pages there, or use a virtual host and place them in another location.

A virtual host is a method of hosting multiple domain names on the same server.

Let’s create a simple HTML page in /var/www/tutorial/ (the directory name can be anything you want). Create an index.html file in this location.

cd /var/www

sudo mkdir tutorial

cd tutorial

sudo "${EDITOR:-vi}" index.html

Paste the following to the index.html file:

<!doctype html>

<html>

<head>

<meta charset="utf-8">

<title>Hello, Nginx!</title>

</head>

<body>

<h1>Hello, Nginx!</h1>

<p>We have just configured our Nginx web server on Ubuntu Server!</p>

</body>

</html>

Save this file. In the next step we are going to set up a virtual host to make Nginx use pages from this location.

Setting up virtual host

To set up a virtual host, we need to create a file in the /etc/nginx/sites-enabled/ directory.

For this tutorial, we will make our site available on port 81, not the standard port 80. You can change it if you would like to.

cd /etc/nginx/sites-enabled

sudo "${EDITOR:-vi}" tutorial

server {
    listen 81;
    listen [::]:81;

    server_name example.ubuntu.com;

    root /var/www/tutorial;
    index index.html;

    location / {
        try_files $uri $uri/ =404;
    }
}

root is the directory where we have placed our .html file. index specifies the file served when visiting the root directory of the site. server_name can be anything you want, because you aren’t pointing it to any real domain yet.

Activating virtual host and testing results

To make our site work, simply restart the Nginx service.

sudo service nginx restart

Let’s check if everything works as it should. Open our newly created site in a web browser. Remember that we used port 81.

Adjusting the Firewall

Before testing Nginx, the firewall software needs to be adjusted to allow access to the service. Nginx registers itself as a service with ufw upon installation, making it straightforward to allow Nginx access.

List the application configurations that ufw knows how to work with by typing the following:

sudo ufw app list


Your output should be a list of the application profiles:

Output

Available applications:

Nginx Full

Nginx HTTP

Nginx HTTPS

OpenSSH

This list displays three profiles available for Nginx:

- Nginx Full: This profile opens both port 80 (normal, unencrypted web traffic) and port 443 (TLS/SSL encrypted traffic)

- Nginx HTTP: This profile opens only port 80 (normal, unencrypted web traffic)

- Nginx HTTPS: This profile opens only port 443 (TLS/SSL encrypted traffic)

It is recommended that you enable the most restrictive profile that will still allow the traffic you’ve configured. Since you haven’t configured SSL for your server yet in this guide, you’ll only need to allow traffic on port 80.

You can enable this by typing the following:

sudo ufw allow 'Nginx HTTP'

Then, verify the change:

sudo ufw status


You should receive a list of HTTP traffic allowed in the output.

You can access the default Nginx landing page to confirm that the software is running properly by navigating to your server’s IP address. If you do not know your server’s IP address, you can get it a few different ways.

Try typing the following at your server’s command prompt:

ip addr show eth0 | grep inet | awk '{ print $2; }' | sed 's/\/.*$//'


You will receive a few lines. You can try each in your web browser to confirm if they work.

An alternative is running the following command, which should generate your public IP address as identified from another location on the internet:

curl -4 icanhazip.com


When you have your server’s IP address, enter it into your browser’s address bar:

http://your_server_ip

You should receive the default Nginx landing page.

By default, Nginx is configured to start automatically when the server boots. If this is not what you want, you can disable this behavior by typing the following:

sudo systemctl disable nginx


To re-enable the service to start up at boot, you can type the following:

sudo systemctl enable nginx

Install Nginx on Ubuntu

https://www.digitalocean.com/community/tutorials/how-to-install-nginx-on-ubuntu-18-04

Next, enable the file by creating a link from it to the sites-enabled directory, which Nginx reads from during startup:

sudo ln -s /etc/nginx/sites-available/your_domain /etc/nginx/sites-enabled/


Two server blocks are now enabled and configured to respond to requests based on their listen and server_name directives:

your_domain: Will respond to requests for your_domain and www.your_domain.

default: Will respond to any requests on port 80 that do not match the other block.

To avoid a possible hash bucket memory problem that can arise from adding additional server names, it is necessary to adjust a single value in the /etc/nginx/nginx.conf file. Open the file:

sudo nano /etc/nginx/nginx.conf


Find the server_names_hash_bucket_size directive and remove the # symbol to uncomment the line:

/etc/nginx/nginx.conf

...
http {
    ...
    server_names_hash_bucket_size 64;
    ...
}
...

Save and close the file when you are finished.

Next, test to make sure that there are no syntax errors in any of your Nginx files:

sudo nginx -t


If there aren’t any problems, restart Nginx to enable your changes:

sudo systemctl restart nginx

Server Logs

/var/log/nginx/access.log: Every request to your web server is recorded in this log file unless Nginx is configured to do otherwise.

/var/log/nginx/error.log: Any Nginx errors will be recorded in this log.

Compiling and Installing NGINX from Source

https://www.alibabacloud.com/blog/how-to-build-nginx-from-source-on-ubuntu-20-04-lts_597793

Update the package index and install dependencies

sudo apt-get update

sudo apt-get install build-essential libpcre3 libpcre3-dev zlib1g zlib1g-dev libssl-dev libgd-dev libxml2 libxml2-dev uuid-dev

Download NGINX Source Code and Configure

We now have all the necessary tools to compile NGINX.

Now, we need to download the NGINX source from their Official website.

Run the following command to download the source code.

wget http://nginx.org/download/nginx-1.20.0.tar.gz

We now have the NGINX source code in tarball format. We can extract it by using this command

tar -zxvf nginx-1.20.0.tar.gz

Go to the extracted directory by using this command

cd nginx-1.20.0

Now we run the configure script with the flags for our build by using this command.

./configure --prefix=/var/www/html --sbin-path=/usr/sbin/nginx --conf-path=/etc/nginx/nginx.conf --http-log-path=/var/log/nginx/access.log --error-log-path=/var/log/nginx/error.log --with-pcre --lock-path=/var/lock/nginx.lock --pid-path=/var/run/nginx.pid --with-http_ssl_module --with-http_image_filter_module=dynamic --modules-path=/etc/nginx/modules --with-http_v2_module --with-stream=dynamic --with-http_addition_module --with-http_mp4_module

In the above command, we set custom paths for the NGINX configuration file, the access log, and the error log, and enabled some additional NGINX modules.

Build NGINX & Adding Modules

NGINX offers many configuration options, and you can use them as needed. The full list of configure options is available at nginx.org.

Some modules are built into NGINX by default.

Modules Built by Default

If you don't need a module that is built by default, you can disable it by passing the --without-<MODULE-NAME> option to the configure script, for example:

./configure --without-http_empty_gif_module

Compiling the NGINX source code

Once the custom configuration is complete, compile the NGINX source code with this command:

make

This will take quite a bit of time. Once that's done, install the compiled binaries with this command:

make install

Start NGINX with this command:

nginx

We have now installed NGINX. To verify this, check the NGINX version with this command:

nginx -V

Or you can visit your IP address to see the NGINX holding page.

http://your-IP-address

Standard NGINX Command-Line Tools

Before we start, however, let's quickly see how to use the standard NGINX command-line tools to execute service signals.

We can confirm that NGINX is running by checking for the process.

ps aux | grep nginx

We can see the master and worker processes here.

With NGINX running in the background, let's see how to send it a stop signal using the standard command-line tools.

For example, with NGINX running, we can send the stop signal with this command:

nginx -s stop

You can check the NGINX status by visiting your IP address; you will not see the holding page, as NGINX is now stopped or, more accurately, terminated.
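To make the idea of a "stop signal" more concrete, here is a minimal sketch of the mechanism: the master process ID is stored in a PID file, and a signal is delivered to that process. A background sleep stands in for the NGINX master and a scratch file stands in for /var/run/nginx.pid; both are stand-ins for illustration only.

```shell
#!/bin/sh
# Stand-in for the NGINX master process (hypothetical; a real setup would
# already have nginx running with its PID stored in /var/run/nginx.pid).
pidfile=$(mktemp)
sleep 60 &
echo $! > "$pidfile"

# Roughly what "nginx -s stop" does: read the master PID and send TERM.
# ("nginx -s quit" corresponds to QUIT and "nginx -s reload" to HUP.)
kill -TERM "$(cat "$pidfile")"
wait "$(cat "$pidfile")" 2>/dev/null
status=$?

# A process ended by a signal is reported as 128 + signal number (TERM is 15).
echo "exit status: $status"
rm -f "$pidfile"
```

On a real installation the equivalent manual command would be along the lines of kill -TERM "$(cat /var/run/nginx.pid)", using the PID path chosen at configure time.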

So next, let's add that systemd service.

To enable the service, we're going to have to add a small script, which is the same across operating systems.

Create an NGINX systemd unit file using the nano editor:

nano /lib/systemd/system/nginx.service

and paste this script:

[Unit]

Description=The NGINX HTTP and reverse proxy server

After=syslog.target network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=forking

PIDFile=/var/run/nginx.pid

ExecStartPre=/usr/sbin/nginx -t

ExecStart=/usr/sbin/nginx

ExecReload=/usr/sbin/nginx -s reload

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

You can change the PIDFile location to match your custom configuration path.

Now save the file by pressing CTRL+X, then Y, then Enter.

Start NGINX through systemd with this command:

systemctl restart nginx

Now you can manage NGINX with systemd.

You can also check whether NGINX is running with this command:

systemctl status nginx

This gives us a really informative printout of the NGINX server status.

Enable NGINX on Boot

As mentioned, the other very useful feature of a systemd service is enabling NGINX to start automatically when the system boots. At the moment, when this machine is shut down or rebooted, NGINX will no longer be running.

That is obviously not good for a web server.

To enable start-up on boot, run this command:

systemctl enable nginx

We get confirmation that a start-up symlink has been created for this service.

We can test this by rebooting the machine.

That's it!

mounting volume docker container

https://www.geeksforgeeks.org/mounting-a-volume-inside-docker-container/

NGINX and PHP-FPM. What my permissions should be?

https://www.getpagespeed.com/server-setup/nginx-and-php-fpm-what-my-permissions-should-be

Why is enabling the www-data user dangerous?

Everything that runs on a web server runs with userid = www-data and group = www-data. Enabling that userid gives it complete control of your machine, so any small security bug in anything you run on that web server gives external attackers 100% control. That is less than desirable and an enormous red flag to anyone who is in any way serious about security.

Secure Shell (SSH)

SSH, or Secure Shell Protocol, is a remote administration protocol that allows users to access, control, and modify their remote servers over the internet.

The SSH command consists of 3 distinct parts:

ssh {user}@{host}

The ssh command instructs your system that you want to open an encrypted Secure Shell connection. {user} represents the account you want to access. For example, you may want to access the root user, which is basically synonymous with the system administrator, with complete rights to modify anything on the system. {host} refers to the computer you want to access. This can be an IP address (e.g. 244.235.23.19) or a domain name (e.g. www.xyzdomain.com).

When you hit enter, you will be prompted to enter the password for the requested account. When you type it in, nothing will appear on the screen, but your password is, in fact being transmitted. Once you’re done typing, hit enter once again. If your password is correct, you will be greeted with a remote terminal window.

Configure Password-Based SSH Authentication or How to Enable/Disable password based authentication for SSH access to server

Password authentication for SSH isn't bad, but creating a long and complicated password may also encourage you to store it in an unsecured manner. Using encryption keys to authenticate SSH connections is a more secure alternative.

enable :

passwd

vim /etc/ssh/sshd_config

PasswordAuthentication yes

/etc/init.d/sshd restart

disable:

vim /etc/ssh/sshd_config

PasswordAuthentication no

service sshd restart

https://www.ssh.com/academy/ssh/sshd_config

SSH passphrase

A passphrase is similar to a password. However, a password generally refers to something used to authenticate or log into a system, while a passphrase generally refers to a secret used to protect an encryption key. Commonly, an actual encryption key is derived from the passphrase and used to encrypt the protected resource.

Adding or changing a passphrase

You can change the passphrase for an existing private key without regenerating the keypair by typing the following command:

$ ssh-keygen -p -f ~/.ssh/id_ed25519

> Enter old passphrase:[Type old passphrase]

> Key has comment 'your_email@example.com'

> Enter new passphrase (empty for no passphrase):[Type new passphrase]

> Enter same passphrase again:[Repeat the new passphrase]

> Your identification has been saved with the new passphrase.

https://www.cyberciti.biz/faq/howto-ssh-changing-passphrase/

Listing OpenSSH private and public ssh keys

You can use the ls -l $HOME/.ssh/ command to see the following files:

- id_dsa.* : DSA keys for authentication

- id_rsa.* : RSA keys for authentication

- id_ed25519.* : EdDSA keys for authentication

For example:

ls -l ~/.ssh/

ls -l ~/.ssh/id_*

Typically private key names start with id_rsa or id_ed25519 or id_dsa, and they are protected with a passphrase. However, users can name their keys anything. In the above example, for my intel NUC, I named RSA keys as follows:

- intel_nuc_debian – Private RSA key

- intel_nuc_debian.pub – Public RSA key

How to change a ssh passphrase for private key

The procedure is as follows for OpenSSH to change a passphrase:

- Open the terminal application

- To change the passphrase for default SSH private key:

ssh-keygen -p

- First, enter the old passphrase and then type a new passphrase two times.

- You can specify the filename of the key file:

ssh-keygen -p -f ~/.ssh/intel_nuc_debian

Let us look at the examples for changing a passphrase with the ssh-keygen command in detail.

OpenSSH Change a Passphrase ssh-keygen command

The -p option requests changing the passphrase of a private key file instead of creating a new private key. The program will prompt for the file containing the private key, for the old passphrase, and twice for the new passphrase. Use the -f {filename} option to specify the filename of the key file. For example, open the Terminal app and change directory to $HOME/.ssh with the cd command:

$ cd ~/.ssh/

To change a DSA passphrase, enter:

$ ssh-keygen -f id_dsa -p

For an ed25519 key:

$ ssh-keygen -f id_ed25519 -p

To change an RSA passphrase, enter:

$ ssh-keygen -f id_rsa -p

Removing a Passphrase with ssh-keygen

The syntax is the same, but to remove the existing passphrase, hit the Enter key twice at the steps to enter the new one and then confirm it:

ssh-keygen -f ~/.ssh/id_rsa -p

ssh-keygen -f ~/.ssh/aws_cloud_automation -p

However, you can state an empty passphrase with the -N option as follows, to save hitting the Enter key twice:

ssh-keygen -p -N ""

ssh-keygen -f ~/.ssh/aws_cloud_automation -p -N ""

Permissions for .ssh folder and key files

https://www.frankindev.com/2020/11/26/permissions-for-.ssh-folder-and-key-files/

Typically, the permissions need to be:

- .ssh directory: 700 (drwx------)

- public key (.pub files): 644 (-rw-r--r--)

- private key (id_rsa): 600 (-rw-------)

- lastly, your home directory should not be writeable by the group or others (at most 755 (drwxr-xr-x))

Use the following commands to change the permissions:

sudo chmod 700 ~/.ssh

sudo chmod 644 ~/.ssh/id_example.pub

sudo chmod 600 ~/.ssh/id_example

Summary based on the ssh man page (shown by man ssh):

+------------------------+-------------------------------------+-------------+-------------+
| Directory or File      | Man Page                            | Recommended | Mandatory   |
|                        |                                     | Permissions | Permissions |
+------------------------+-------------------------------------+-------------+-------------+
| ~/.ssh/                | There is no general requirement to  | 700         |             |
|                        | keep the entire contents of this    |             |             |
|                        | directory secret, but the           |             |             |
|                        | recommended permissions are         |             |             |
|                        | read/write/execute for the user,    |             |             |
|                        | and not accessible by others.       |             |             |
+------------------------+-------------------------------------+-------------+-------------+
| ~/.ssh/authorized_keys | This file is not highly sensitive,  | 600         |             |
|                        | but the recommended permissions are |             |             |
|                        | read/write for the user, and not    |             |             |
|                        | accessible by others                |             |             |
+------------------------+-------------------------------------+-------------+-------------+
| ~/.ssh/config          | Because of the potential for abuse, |             | 600         |
|                        | this file must have strict          |             |             |
|                        | permissions: read/write for the     |             |             |
|                        | user, and not accessible by others. |             |             |
|                        | It may be group-writable provided   |             |             |
|                        | that the group in question contains |             |             |
|                        | only the user.                      |             |             |
+------------------------+-------------------------------------+-------------+-------------+
| ~/.ssh/identity        | These files contain sensitive data  |             | 600         |
| ~/.ssh/id_dsa          | and should be readable by the user  |             |             |
| ~/.ssh/id_rsa          | but not accessible by others        |             |             |
|                        | (read/write/execute)                |             |             |
+------------------------+-------------------------------------+-------------+-------------+
| ~/.ssh/identity.pub    | Contains the public key for         | 644         |             |
| ~/.ssh/id_dsa.pub      | authentication. These files are     |             |             |
| ~/.ssh/id_rsa.pub      | not sensitive and can (but need     |             |             |
|                        | not) be readable by anyone.         |             |             |
+------------------------+-------------------------------------+-------------+-------------+
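The permissions above can be tried out safely on a scratch directory before touching a real ~/.ssh. This is a minimal sketch that assumes GNU coreutils stat (the -c option); the file names id_example and id_example.pub are placeholders.

```shell
#!/bin/sh
# Scratch directory standing in for a real home directory.
home=$(mktemp -d)
mkdir "$home/.ssh"
touch "$home/.ssh/id_example" "$home/.ssh/id_example.pub" "$home/.ssh/authorized_keys"

chmod 700 "$home/.ssh"
chmod 600 "$home/.ssh/id_example" "$home/.ssh/authorized_keys"
chmod 644 "$home/.ssh/id_example.pub"

# Print the octal mode and name of each entry.
perms=$(cd "$home" && stat -c '%a %n' .ssh .ssh/id_example .ssh/id_example.pub .ssh/authorized_keys)
echo "$perms"
rm -rf "$home"
```

Running the same stat command inside your real home directory is a quick way to check whether the existing modes match the table.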

fail2ban

Fail2ban is an intrusion prevention software framework that protects computer servers from brute-force attacks. Written in the Python programming language, it is able to run on POSIX systems that have an interface to a packet-control system or firewall installed locally, for example, iptables or TCP Wrapper.

What is fail2ban for SSH?

Fail2Ban is an intrusion prevention framework written in Python that protects Linux systems and servers from brute-force attacks. You can setup Fail2Ban to provide brute-force protection for SSH on your server. This ensures that your server is secure from brute-force attacks

Authorized keys or How To Configure SSH Key-Based Authentication on a Linux Server

Step 1 — Creating SSH Keys

ssh-keygen

Step 2 — Copying an SSH Public Key to Your Server

Copying Your Public Key Using ssh-copy-id

ssh-copy-id username@remote_host

Copying Your Public Key Using SSH

If you do not have ssh-copy-id available, but you have password-based SSH access to an account on your server, you can upload your keys using a conventional SSH method.

We can do this by outputting the content of our public SSH key on our local computer and piping it through an SSH connection to the remote server. On the other side, we can make sure that the ~/.ssh directory exists under the account we are using and then output the content we piped over into a file called authorized_keys within this directory.

We will use the >> redirect symbol to append the content instead of overwriting it. This will let us add keys without destroying previously added keys.

cat ~/.ssh/id_rsa.pub | ssh username@remote_host "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys"

Copying Your Public Key Manually

If you do not have password-based SSH access to your server available, you will have to do the above process manually.

The content of your id_rsa.pub file will have to be added to a file at ~/.ssh/authorized_keys on your remote machine somehow.

To display the content of your id_rsa.pub key, type this into your local computer:

cat ~/.ssh/id_rsa.pub

Access your remote host using whatever method you have available. This may be a web-based console provided by your infrastructure provider.

Once you have access to your account on the remote server, you should make sure the ~/.ssh directory is created. This command will create the directory if necessary, or do nothing if it already exists:

mkdir -p ~/.ssh

Now, you can create or modify the authorized_keys file within this directory. You can add the contents of your id_rsa.pub file to the end of the authorized_keys file, creating it if necessary, using this:

echo public_key_string >> ~/.ssh/authorized_keys

In the above command, substitute the public_key_string with the output from the cat ~/.ssh/id_rsa.pub command that you executed on your local system. It should start with ssh-rsa AAAA... or similar.

If this works, you can move on to test your new key-based SSH authentication.
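One drawback of appending with >> is that running the command twice adds the same key twice. A minimal sketch of a guard against that is shown below; it works on a scratch file rather than the real ~/.ssh/authorized_keys, and both the key string and the add_key helper are hypothetical names used only for this example.

```shell
#!/bin/sh
# Scratch file standing in for ~/.ssh/authorized_keys; the key below is a
# placeholder, not a real public key.
auth=$(mktemp)
key='ssh-rsa AAAAB3placeholder user@example'

add_key() {
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF "$1" "$auth" || echo "$1" >> "$auth"
}

add_key "$key"
add_key "$key"   # second call is a no-op
lines=$(wc -l < "$auth" | tr -d ' ')
echo "entries: $lines"
rm -f "$auth"
```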

Step 3 — Authenticating to Your Server Using SSH Keys

Logging in works mostly the same as with password authentication:

ssh username@remote_host

Step 4 — Disabling Password Authentication on your Server

If you were able to login to your account using SSH without a password, you have successfully configured SSH key-based authentication to your account. However, your password-based authentication mechanism is still active, meaning that your server is still exposed to brute-force attacks.

Before completing the steps in this section, make sure that you either have SSH key-based authentication configured for the root account on this server, or preferably, that you have SSH key-based authentication configured for an account on this server with sudo access. This step will lock down password-based logins, so ensuring that you will still be able to get administrative access is essential.

Once the above conditions are true, log into your remote server with SSH keys, either as root or with an account with sudo privileges. Open the SSH daemon’s configuration file:

sudo nano /etc/ssh/sshd_config

Inside the file, search for a directive called PasswordAuthentication. This may be commented out. Uncomment the line by removing any # at the beginning of the line, and set the value to no. This will disable your ability to log in through SSH using account passwords:

/etc/ssh/sshd_config

PasswordAuthentication no

Save and close the file when you are finished. To actually implement the changes we just made, you must restart the service.

On most Linux distributions, you can issue the following command to do that:

sudo systemctl restart ssh

After completing this step, you’ve successfully transitioned your SSH daemon to only respond to SSH keys.

What's the difference between id_rsa.pub and id_dsa.pub?

id_rsa.pub and id_dsa.pub are the public keys for id_rsa and id_dsa.

If you are asking in relation to SSH, id_rsa is an RSA key and can be used with the SSH protocol 1 or 2, whereas id_dsa is a DSA key and can only be used with SSH protocol 2.

What is difference between Id_rsa and Id_rsa pub?

In the context of ssh and related software, id_rsa is your RSA *private* key, used to sign and authenticate your connection to a remote host.

id_rsa.pub is your RSA *public* key, which, when supplied to the remote host (via an 'authorized keys' file, publishing it in the DNS, or other means), allows the host to authenticate your connection as having originated from you, and to decide whether or not to accept it as a result.

Don’t get the two confused, and maintain tight control over id_rsa, because anybody who gets that can use it to impersonate you.

What is the difference between ssh_config and sshd_config

When you work on a Linux system, you use the SSH program on a daily basis. You will need to configure the ssh client or the ssh daemon on your Linux box to make it work properly. In each Linux distribution (Debian, Red Hat and so on), there are two configuration files for the SSH program: ssh_config and sshd_config. What is the difference between them?

ssh_config: configuration file for the ssh client on the host machine you are running. For example, if you want to ssh to another remote host machine, you use an SSH client. Every setting for this SSH client comes from ssh_config, such as port number, protocol version and encryption/MAC algorithms.

sshd_config: configuration file for the sshd daemon (the program that listens for incoming connection requests on the ssh port) on the host machine. That is to say, if someone wants to connect to your host machine via SSH, their SSH client settings must match your sshd_config settings in order to communicate with you, such as port number, version and so on.

sshd_config is the configuration file for the OpenSSH server.

ssh_config is the configuration file for the OpenSSH client: https://www.ssh.com/academy/ssh/config

How ssh_config Works

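The ssh client reads configuration from three places. For each option the first value obtained is used, so the sources are listed here from highest to lowest precedence:

- Command line flags supplied to ssh directly

- User-specific configuration in your home directory: ~/.ssh/config

- System-wide configuration in /etc/ssh/ssh_config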

What is PPA?

PPA stands for Personal Package Archive. A PPA allows application developers and Linux users to create their own repositories to distribute software. With a PPA, you can easily get newer software versions or software that is not available via the official Ubuntu repositories.

Concept of repositories and package management

A repository is a collection of files that has information about various software, their versions and some other details like the checksum. Each Ubuntu version has its own official set of four repositories:

- Main – Canonical-supported free and open-source software.

- Universe – Community-maintained free and open-source software.

- Restricted – Proprietary drivers for devices.

- Multiverse – Software restricted by copyright or legal issues.

You can browse such repositories for all Ubuntu versions online, including individual repositories such as the Ubuntu 16.04 main repository.

So basically it's a web URL that has information about the software. How does your system know where these repositories are?

This information is stored in the sources.list file in the directory /etc/apt. If you look at its content, you'll see that it has the URLs of the repositories. The lines with # at the beginning are ignored.
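To see only the entries that apt actually uses, the commented-out and blank lines can be filtered away. The sketch below does this on a small sample file; the repository lines in it are illustrative, and on a real system you would run the same grep against /etc/apt/sources.list.

```shell
#!/bin/sh
# Sample file standing in for /etc/apt/sources.list (entries are illustrative).
list=$(mktemp)
cat > "$list" <<'EOF'
# deb cdrom:[Ubuntu 20.04 LTS]/ focal main restricted
deb http://archive.ubuntu.com/ubuntu focal main restricted

# deb-src http://archive.ubuntu.com/ubuntu focal main restricted
deb http://archive.ubuntu.com/ubuntu focal universe
EOF

# Drop comment lines and blank lines, keeping only active entries.
active=$(grep -Ev '^[[:space:]]*(#|$)' "$list")
echo "$active"
rm -f "$list"
```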

How to use PPA? How does PPA work?

https://itsfoss.com/ppa-guide/

PPA, as I already told you, means Personal Package Archive. Mind the word ‘Personal’ here. That gives the hint that this is something exclusive to a developer and is not officially endorsed by the distribution.

Ubuntu provides a platform called Launchpad that enables software developers to create their own repositories. An end user i.e. you can add the PPA repository to your sources.list and when you update your system, your system would know about the availability of this new software and you can install it using the standard sudo apt install command like this.

sudo add-apt-repository ppa:dr-akulavich/lighttable

sudo apt-get update

sudo apt-get install lighttable-installer

To summarize:

- sudo add-apt-repository <PPA_info> <– This command adds the PPA repository to the list.

- sudo apt-get update <– This command updates the list of the packages that can be installed on the system.

- sudo apt-get install <package_in_PPA> <– This command installs the package.

Basically, when you add a PPA using add-apt-repository, it does the same thing as manually adding these lines to your sources:

deb http://ppa.launchpad.net/dr-akulavich/lighttable/ubuntu YOUR_UBUNTU_VERSION_HERE main

deb-src http://ppa.launchpad.net/dr-akulavich/lighttable/ubuntu YOUR_UBUNTU_VERSION_HERE main

The above two lines are the traditional way to add any repository to your sources.list. add-apt-repository does it automatically for you, so you don't have to work out the exact repository URL and operating system version.

One important thing to note here is that when you use PPA, it doesn’t change your original sources.list. Instead, it creates two files in /etc/apt/sources.list.d directory, a list and a back up file with suffix ‘save’.

“add-apt-repository command not found” Error

The add-apt-repository command is not installed by default. If you try to run this command you will get the “add-apt-repository command not found” error. If you get this error you should install this tool which is described in the following step.

Install add-apt-repository Command

The add-apt-repository command is provided with the package named software-properties-common. So we will install this package like below.

sudo apt install software-properties-common

Install Java

Simplest method:

The Ubuntu repository offers two open-source Java packages: the Java Development Kit (OpenJDK) and the Java Runtime Environment (OpenJRE). You use the JRE for running Java-based applications, while the JDK is for developing and programming with Java.

sudo apt update

Then you need to check if Java is already installed:

java -version

Run the following command to install OpenJDK:

sudo apt install default-jre

This command installs the Java Runtime Environment (JRE). It allows you to run almost any Java software.

Now check the Java version:

java -version

To compile and run some specific Java programs in addition to the JRE, you may need the Java Development Kit (JDK). To install the JDK, run the following command, which also installs the JRE:

sudo apt install default-jdk

This command installs the Java Development Kit (JDK).

Now check JDK version with command:

javac -version

JDK is installed!

java installation PPA

https://phoenixnap.com/kb/how-to-install-java-ubuntu

Install Oracle Java 11

To download the official Oracle JDK, you first need to add a third-party repository.

We include instructions for installations from 2 (two) different package repositories. You can decide from which one you prefer to download.

Option 1: Download Oracle Java from Webupd8 PPA

1. First, add the required package repository by typing:

sudo add-apt-repository ppa:webupd8team/java

Hit Enter when prompted.

2. Make sure to update your system before initiating any installation:

sudo apt update

3. Now, you can install Java 11, the latest LTS version:

sudo apt install oracle-java11-installer

4. Optionally, you can set this Java version as the default with the following command:

sudo apt install oracle-java11-set-default

Option 2: Download Oracle Java from Linux Uprising PPA

1. Before adding the new repository, install the required packages if you do not have them on your system yet:

sudo apt install software-properties-common

2. Next, add the repository with the following command:

sudo add-apt-repository ppa:linuxuprising/java

3. Update the package list before installing any new software with:

sudo apt update

4. Then, download and install the latest version of Oracle Java (version number 11):

sudo apt install oracle-java11-installer

Install Specific Version of Java

If for some reason you do not wish to install the default or latest version of Java, you can specify the version number you prefer.

Install Specific Version of OpenJDK

You may decide to use Open JDK 8, instead of the default OpenJDK 11.

To do so, open the terminal and type in the following command:

sudo apt install openjdk-8-jdk

Verify the version of Java installed with the command:

java -version

Install Specific Version of Oracle Java

When you download the Oracle Java packages from a third-party repository, you have to type out the version number as part of the package name.

Therefore, if you want other versions of Oracle Java on your system, change that number accordingly.

The command for installing Oracle JDK is the following (the symbol # representing the Java version):

sudo apt install oracle-java#-installer

For instance, if you want to install Java 10, use the command:

sudo apt install oracle-java10-installer

How to Set Default Java Version

As you can have multiple versions of Java installed on your system, you can decide which one is the default one.

First, run a command that shows all the installed versions on your computer:

sudo update-alternatives --config java

In this example, the output lists two alternatives on this system. These choices are represented by numbers 1 (Java 11) and 2 (Java 8), while 0 refers to the current default version.

As the output instructs, you can change the default version if you type its associated number (in this case, 1 or 2) and press Enter.

How to Set JAVA_HOME Environment Variable

The JAVA_HOME environment variable determines the location of your Java installation. The variable helps other applications access Java’s installation path easily.
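As a rough sketch of what the value looks like: JAVA_HOME is the installation directory, which is the path of the java binary with the trailing /bin/java removed. The example below assumes the OpenJDK 11 path used later in this guide and derives the directory with shell parameter expansion; no Java installation is needed to run it.

```shell
#!/bin/sh
# Path of the java binary as reported for OpenJDK 11 in this guide.
java_bin=/usr/lib/jvm/java-11-openjdk-amd64/bin/java

# Strip the trailing "/bin/java" to get the installation directory.
JAVA_HOME=${java_bin%/bin/java}
export JAVA_HOME
echo "$JAVA_HOME"
```

For the OpenJDK 8 layout, where the binary sits under jre/bin/java, the same expansion would yield the jre subdirectory rather than the JDK root, so the result should be checked by hand.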

1. To set up the JAVA_HOME variable, you first need to find where Java is installed. Use the following command to locate it:

sudo update-alternatives --config java

The Path section shows the locations, which are in this case:

- /usr/lib/jvm/java-11-openjdk-amd64/bin/java (where OpenJDK 11 is located)

- /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java (where OpenJDK 8 is located)

2. Once you see all the paths, copy the one for your preferred Java version.

3. Then, open the file /etc/environment with any text editor. In this example, we use Nano:

nano /etc/environment

4. At the end of the file, add a line which specifies the location of JAVA_HOME in the following manner:

JAVA_HOME="/your/installation/path/"

How to Uninstall Java on Ubuntu

In case you need to remove any of the Java packages installed, use the apt remove command.

To remove Open JDK 11, run the command:

sudo apt remove default-jdk

To uninstall OpenJDK 8:

sudo apt remove openjdk-8-jdk

How to Set Environment Variables in Linux

nano /etc/environment

Setting an Environment Variable

To set an environment variable the export command is used. We give the variable a name, which is what is used to access it in shell scripts and configurations and then a value to hold whatever data is needed in the variable.

export NAME=VALUE

For example, to set the environment variable for the home directory of a manual OpenJDK 11 installation, we would use something similar to the following.

export JAVA_HOME=/opt/openjdk11

To output the value of the environment variable from the shell, we use the echo command and prepend the variable's name with a dollar ($) sign.

echo $JAVA_HOME

As long as the variable has a value, it will be echoed out. If no value is set, an empty line will be displayed instead.
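The difference between a plain shell variable and an exported one can be sketched as follows. The variable names LOCAL_ONLY and SHARED are made up for this example; the child process is a second sh started from the script.

```shell
#!/bin/sh
LOCAL_ONLY=shell-only        # plain shell variable, not exported
export SHARED=exported       # environment variable

# A child process sees only the exported variable; the ${VAR:-unset}
# expansion prints "unset" when the variable is missing or empty.
child=$(sh -c 'echo "${LOCAL_ONLY:-unset} ${SHARED:-unset}"')
echo "$child"
```

This is why settings that other programs need, such as JAVA_HOME, have to be exported rather than just assigned.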

Unsetting an Environment Variable

To unset an environment variable, which removes it altogether, we use the unset command. Simply replacing the value of the environment variable with an empty string will not remove it, and in most cases will likely cause problems with scripts or applications expecting a valid value.

The following syntax is used to unset an environment variable:

unset VARIABLE_NAME

For example, to unset the JAVA_HOME environment variable, we would use the following command.

unset JAVA_HOME

Listing All Set Environment Variables

To list all variables, we simply use the set command without any arguments. Note that set also lists shell variables and functions; printenv shows only the exported environment variables.

set

Persisting Environment Variables for a User

When an environment variable is set from the shell using the export command, its existence ends when the user’s sessions ends. This is problematic when we need the variable to persist across sessions.

To make an environment variable persistent for a user, we export the variable from the user's profile script.

- Open the current user's profile in a text editor

vi ~/.bash_profile

- Add the export command for every environment variable you want to persist.

export JAVA_HOME=/opt/openjdk11

- Save your changes.

Adding the environment variable to a user’s bash profile alone will not export it automatically. However, the variable will be exported the next time the user logs in.

To immediately apply all changes to bash_profile, use the source command.

source ~/.bash_profile

Export Environment Variable

Export is a built-in shell command for Bash that is used to export an environment variable to allow new child processes to inherit it.

To export an environment variable, you run the export command while setting the variable.

export MYVAR="my variable value"

We can view a complete list of exported environment variables by running the export command without any arguments.

export

SHELL=/bin/zsh

SHLVL=1

SSH_AUTH_SOCK=/private/tmp/com.apple.launchd.1pB5Pry8Id/Listeners

TERM=xterm-256color

TERM_PROGRAM=vscode

TERM_PROGRAM_VERSION=1.48.2

To view all exported variables in the current shell you use the -p flag with export.

export -p

Setting Permanent Global Environment Variables for All Users

A permanent environment variable that persists after a reboot can be created by adding it to the default profile. This profile is loaded by all users on the system, including service accounts.

All global profile settings are stored under /etc/profile. And while this file can be edited directory, it is actually recommended to store global environment variables in a directory named /etc/profile.d, where you will find a list of files that are used to set environment variables for the entire system.

- Create a new file under /etc/profile.d to store the global environment variable(s). The name of the should be contextual so others may understand its purpose. For demonstrations, we will create a permanent environment variable for HTTP_PROXY.

sudo touch /etc/profile.d/http_proxy.sh

- Open the default profile into a text editor.

sudo vi /etc/profile.d/http_proxy.sh

- Add new lines to export the environment variables

export HTTP_PROXY=http://my.proxy:8080

export HTTPS_PROXY=https://my.proxy:8080

export NO_PROXY=localhost,::1,.example.com

- Save your changes and exit the text editor
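The steps above can be tried without root access. The sketch below assumes a temporary directory standing in for /etc/profile.d (the real directory needs sudo) and reuses the file name and proxy values from the example. At login, /etc/profile typically sources every *.sh file in /etc/profile.d in much the same way as the loop shown here.

```shell
# Stand-in for /etc/profile.d so the sketch runs without sudo (assumption).
profile_dir=$(mktemp -d)

# Write the script with one export per line.
cat > "$profile_dir/http_proxy.sh" <<'EOF'
export HTTP_PROXY=http://my.proxy:8080
export HTTPS_PROXY=https://my.proxy:8080
export NO_PROXY=localhost,::1,.example.com
EOF

# Roughly what /etc/profile does at login: source each readable *.sh file.
for script in "$profile_dir"/*.sh; do
    [ -r "$script" ] && . "$script"
done

# A child process now inherits the variables.
proxy_seen_by_child=$(sh -c 'echo "$HTTP_PROXY"')
echo "$proxy_seen_by_child"
```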

https://www.ibm.com/docs/sl/b2b-integrator/5.2?topic=installation-setting-java-variables-in-linux

TOMCAT

What is Tomcat used for?

Tomcat is mainly used to host Java servlets. It acts as the servlet container at the center of the application, while JavaServer Pages (JSP) and servlets produce the dynamic pages. JSP is a server-side technology that lets developers create platform-independent dynamic content.

Benefits of Apache Tomcat

- Tomcat is a quick and easy way to run your applications in Ubuntu. It provides quick loading and helps run a server more efficiently

- Tomcat contains a suite of comprehensive, built-in customization choices which enable its users to work flexibly

- Tomcat is a free, open-source application. It offers great customization through access to the code

- Tomcat offers its users an extra level of security

- Thanks to its stability, even if you face issues in Tomcat, it doesn’t stop the rest of the server from working

- Apache Tomcat is one of the most widely adopted Java application and web servers in production today.

Installing tomcat

https://www.hostinger.in/tutorials/how-to-install-tomcat-on-ubuntu/

Step 1: Install Java

Step 2: Create Tomcat User

For security, Tomcat should run as its own dedicated, unprivileged user rather than as root. Setting this user up first also simplifies the permission steps later in the install. Create a new tomcat group that will run the service:

sudo groupadd tomcat

Next, create a new tomcat user. Make it a member of the tomcat group, give it the home directory /opt/tomcat (where Tomcat will be installed), and set its shell to /bin/false so that nobody can log in to the account:

sudo useradd -s /bin/false -g tomcat -d /opt/tomcat tomcat

When /sbin/nologin is set as the shell and a user with that shell logs in, they get a polite message saying 'This account is currently not available.' This message can be changed with the file /etc/nologin.txt.

/bin/false is just a binary that exits immediately with a failure status when it is called, so when someone who has false as their shell logs in, they are logged out as soon as false exits. Setting the shell to /bin/true has the same effect of not allowing someone to log in, but false is used by convention because it better conveys that the account has no shell.
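The difference between the two binaries is only their exit status, which can be checked directly. This sketch assumes the binaries live at /bin/false and /bin/true, as on Ubuntu, and falls back to /usr/bin on systems that place them there.

```shell
# Locate the binaries; /usr/bin is a fallback (assumption about the system layout).
false_bin=/bin/false; [ -x "$false_bin" ] || false_bin=/usr/bin/false
true_bin=/bin/true;   [ -x "$true_bin" ]  || true_bin=/usr/bin/true

# Run each one and record its exit status; both exit immediately without output.
if "$false_bin"; then false_status=0; else false_status=$?; fi
if "$true_bin";  then true_status=0;  else true_status=$?;  fi

# false reports a non-zero (failure) status, true reports 0 (success).
echo "false exited with $false_status, true exited with $true_status"
```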

follow this:

https://www.hostinger.in/tutorials/how-to-install-tomcat-on-ubuntu/

Failed to start tomcat: Unit tomcat.service not found

https://askubuntu.com/questions/1255747/failed-to-start-tomcat-unit-tomcat-service-not-found

The "Permission denied" error for the logs directory most likely means that the OS user running the Tomcat process does not have write permission on that directory.

Assuming you are running Tomcat with user "tomcat7", try setting the ownership and filesystem permissions of the logs directory, e.g.:

sudo chown -R tomcat7:tomcat7 /usr/share/tomcat7/logs

sudo chmod -R u+rw /usr/share/tomcat7/logs

If you are running Tomcat with a different OS user, replace tomcat7:tomcat7 by the username and primary group of that user, respectively.
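The effect of the chmod call can be observed on a scratch directory. This sketch assumes a temporary directory in place of the real logs directory and only inspects the owner permission bits. It does not change ownership, which needs root.

```shell
# Scratch directory standing in for the Tomcat logs directory (assumption).
logs_dir=$(mktemp -d)
touch "$logs_dir/catalina.out"

# Simulate the problem: remove the owner's write permission.
chmod -R u-w "$logs_dir"
before=$(ls -ld "$logs_dir" | cut -c2-4)    # owner bits of the directory

# Apply the fix from the text: give the owner read and write access again.
chmod -R u+rw "$logs_dir"
after=$(ls -ld "$logs_dir" | cut -c2-4)

echo "owner bits before: $before, after: $after"
rm -rf "$logs_dir"
```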

Step 3: Install Tomcat on Ubuntu

The best way to install Tomcat 9 on Ubuntu is to download the latest binary release from the Tomcat 9 downloads page and configure it manually. If a version newer than 9.0.60 is available, use the latest stable version instead. Copy the link to the core tar.gz file under the Binary Distributions section.

Now, change to the /tmp directory on your server to download the items which you won’t need after extracting the Tomcat contents:

cd /tmp

To download from the copied link (from the Tomcat website), use the following curl command:

curl -O https://dlcdn.apache.org/tomcat/tomcat-9/v9.0.63/bin/apache-tomcat-9.0.63.tar.gz

Step 4: Update Permissions

Now that the download is finished, extract the archive into /opt/tomcat and give the tomcat user access to the installation directory:

sudo mkdir /opt/tomcat

cd /opt/tomcat

sudo tar xzvf /tmp/apache-tomcat-9.0.*tar.gz -C /opt/tomcat --strip-components=1

Now, give the tomcat group ownership over the entire installation directory with the chgrp command:

sudo chgrp -R tomcat /opt/tomcat

Next, give the tomcat group read access to the conf directory and its contents, and execute access to the directory itself:

sudo chmod -R g+r conf

sudo chmod g+x conf

Make the tomcat user the owner of the webapps, work, temp, and logs directories:

sudo chown -R tomcat webapps/ work/ temp/ logs/

Step 5: Create a systemd Unit File

We need to create a new unit file to run Tomcat as a service. Open your text editor and create a file named tomcat.service in /etc/systemd/system/:

sudo nano /etc/systemd/system/tomcat.service

Next, paste the following configuration:

[Unit]

Description=Apache Tomcat Web Application Container

After=network.target

[Service]

Type=forking

Environment=JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64/jre

Environment=CATALINA_PID=/opt/tomcat/temp/tomcat.pid

Environment=CATALINA_HOME=/opt/tomcat

Environment=CATALINA_BASE=/opt/tomcat

Environment='CATALINA_OPTS=-Xms512M -Xmx1024M -server -XX:+UseParallelGC'

Environment='JAVA_OPTS=-Djava.awt.headless=true -Djava.security.egd=file:/dev/./urandom'

ExecStart=/opt/tomcat/bin/startup.sh

ExecStop=/opt/tomcat/bin/shutdown.sh

User=tomcat

Group=tomcat

UMask=0007

RestartSec=10

Restart=always

[Install]

WantedBy=multi-user.target

Save and close the file.

Next, notify systemd that you have created a new unit file by issuing the following command:

sudo systemctl daemon-reload

The following commands start Tomcat:

cd /opt/tomcat/bin

sudo ./startup.sh run

Step 6: Adjust the Firewall

It is essential to adjust the firewall so the requests get to the service. Tomcat uses port 8080 to accept conventional requests. Allow traffic to that port by using UFW:

sudo ufw allow 8080

You can now access the splash page by going to your domain or IP address followed by :8080 in a web browser: http://IP:8080

Step 7: Configure the Tomcat Web Management Interface

To use the web management interface, add a login to the tomcat-users.xml file. Open the file in a text editor:

sudo nano /opt/tomcat/conf/tomcat-users.xml

Now define a user that can access the management apps by adding a username, password, and roles:

tomcat-users.xml — Admin User

<tomcat-users . . .>

<user username="admin" password="password" roles="manager-gui,admin-gui"/>

</tomcat-users>

By default, the Manager and Host Manager apps only accept connections from the server itself. To allow access from other addresses, edit the RemoteAddrValve entry in each app's context.xml file.

For the Manager app, type:

sudo nano /opt/tomcat/webapps/manager/META-INF/context.xml

For the Host Manager app, type:

sudo nano /opt/tomcat/webapps/host-manager/META-INF/context.xml

Restart the Tomcat service for the changes to take effect:

sudo systemctl restart tomcat

Step 8: Access the Online Interface

Now that you already have a user, you can access the web management interface in a browser. Once again, you can access the interface by providing your server’s domain name or IP address followed by port 8080 in your browser – http://server_domain_or_IP:8080

Let’s take a look at the Manager App, accessible via the link – http://server_domain_or_IP:8080/manager/html.

When prompted, log in with the account credentials you added to the tomcat-users.xml file.
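One way to confirm the entry is present is to search the file for a user carrying the manager-gui role. The sketch below runs against a temporary sample file (an assumption, so it works without a Tomcat installation). On the server, point users_file at /opt/tomcat/conf/tomcat-users.xml instead.

```shell
# Sample file standing in for /opt/tomcat/conf/tomcat-users.xml (assumption).
users_file=$(mktemp)
cat > "$users_file" <<'EOF'
<tomcat-users>
  <user username="admin" password="password" roles="manager-gui,admin-gui"/>
</tomcat-users>
EOF

# Count <user> lines whose roles attribute includes manager-gui.
manager_users=$(grep -c '<user .*roles="[^"]*manager-gui' "$users_file")
echo "users with manager-gui role: $manager_users"
rm -f "$users_file"
```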

We use the Web Application Manager to manage our Java applications. You can Start, Stop, Reload, Deploy, and Undeploy all apps here. It also provides data about your server at the bottom of the page.

Now let’s look at the Host Manager, accessible via http://server_domain_or_IP:8080/host-manager/html/

From the Virtual Host Manager page, you can also add new virtual hosts to serve your applications.

How To Use Rsync to Sync Local and Remote Directories

Rsync, which stands for remote sync, is a remote and local file synchronization tool. It uses an algorithm to minimize the amount of data copied by only moving the portions of files that have changed.

In this tutorial, we’ll define Rsync, review its syntax, explain how to use it to sync with a remote system, and cover other options available to you.

Rsync is a very flexible network-enabled syncing tool. Due to its ubiquity on Linux and Unix-like systems and its popularity as a tool for system scripts, it’s included on most Linux distributions by default.

Understanding Rsync Syntax

The syntax for rsync is similar to that of other tools, such as ssh, scp, and cp.

First, change into your home directory by running the following command:

cd ~

Then create a test directory:

mkdir dir1

Create another test directory:

mkdir dir2

Now add some test files:

touch dir1/file{1..100}

There’s now a directory called dir1 with 100 empty files in it. Confirm by listing out the files:

ls dir1

Output

file1 file18 file27 file36 file45 file54 file63 file72 file81 file90

file10 file19 file28 file37 file46 file55 file64 file73 file82 file91

file100 file2 file29 file38 file47 file56 file65 file74 file83 file92

file11 file20 file3 file39 file48 file57 file66 file75 file84 file93

file12 file21 file30 file4 file49 file58 file67 file76 file85 file94

file13 file22 file31 file40 file5 file59 file68 file77 file86 file95

file14 file23 file32 file41 file50 file6 file69 file78 file87 file96

file15 file24 file33 file42 file51 file60 file7 file79 file88 file97

file16 file25 file34 file43 file52 file61 file70 file8 file89 file98

file17 file26 file35 file44 file53 file62 file71 file80 file9 file99

You also have an empty directory called dir2. To sync the contents of dir1 to dir2 on the same system, you will run rsync and use the -r flag, which stands for “recursive” and is necessary for directory syncing:

rsync -r dir1/ dir2

Another option is to use the -a flag, which is a combination flag and stands for “archive”. This flag syncs recursively and preserves symbolic links, special and device files, modification times, groups, owners, and permissions. It’s more commonly used than -r and is the recommended flag to use. Run the same command as the previous example, this time using the -a flag:

rsync -a dir1/ dir2

Please note that there is a trailing slash (/) at the end of the first argument in the previous two commands, highlighted here:

rsync -a dir1/ dir2

This trailing slash signifies the contents of dir1. Without the trailing slash, dir1, including the directory, would be placed within dir2. The outcome would create a hierarchy like the following:

~/dir2/dir1/[files]

Another tip is to double-check your arguments before executing an rsync command. Rsync provides a method for doing this by passing the -n or --dry-run options. The -v flag, which means “verbose”, is also necessary to get the appropriate output. You’ll combine the a, n, and v flags in the following command:

rsync -anv dir1/ dir2

Output

sending incremental file list

./

file1

file10

file100

file11

file12

file13

file14

file15

file16

file17

file18

. . .

Now compare that output to the one you receive when removing the trailing slash, as in the following:

rsync -anv dir1 dir2

Output